Last update: 25/10/2022

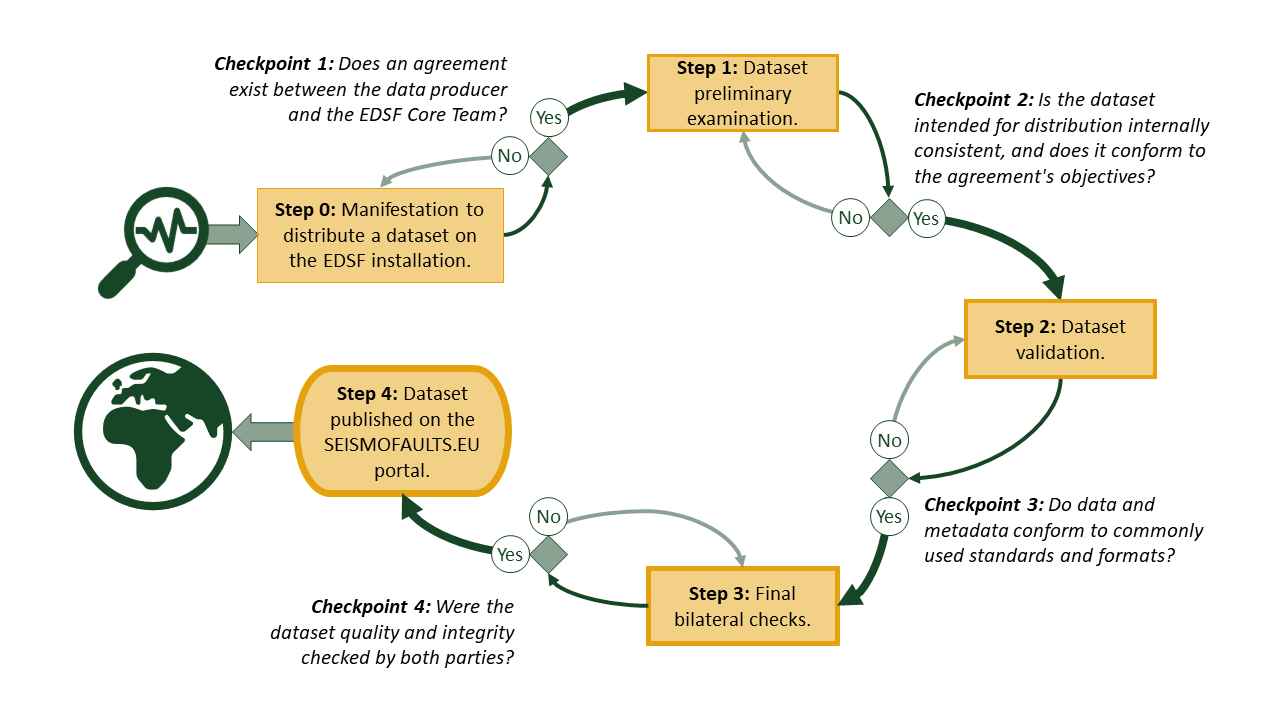

The European Databases of Seismogenic Faults (EDSF) installation operates under the auspices of the EPOS TCS-Seismology work program, particularly those of the EFEHR Consortium, and considers the principles expressed by the EPOS Data Policy. EDSF offers services that distribute data about seismogenic faulting that are proposed by the scientific community, solicited from the scientific community, or stem from project partnerships that involved the use or development of the EDSF installation itself. The following flowchart summarizes how the EDSF Core Team (CT) carries out quality control of the distributed data.

Description of the actions performed at each step of the Quality Control process.

Step 0: Manifestation of interest in distributing a dataset on the EDSF installation.

The data intended for distribution must address the objectives of the EDSF installation. The data producer must confirm full ownership rights to the data product in order to establish a formal agreement with the EDSF CT to redistribute the data and to establish a roadmap toward adopting the FAIR principles. Bypassing the formal agreement is possible when the data producer operates in the framework of a project involving both parties (e.g., a consortium agreement of a European project). The data ownership, authorship, and any other right, obligation, or restriction remain with the data producer. Importantly, none of these rights or obligations can be transferred to the EDSF CT by any means, assumed or implied, either during the Quality Control process or after its completion, regardless of its outcomes.

Checkpoint 1: Does an agreement exist between the data producer and the EDSF CT?

Step 1: Dataset preliminary examination.

The data producer shares the dataset intended for distribution with the EDSF CT. The data should be made available on a platform or system, and in formats, that allow the EDSF CT to examine it without further intervention by the data producer. Proper documentation detailing the data structure should accompany the data submission. If the data description does not conform to the submitted data, the EDSF CT invites the data producer to fix the issues before proceeding to the next step. At this stage, the data sharing between the involved parties remains confidential.

Checkpoint 2: Is the dataset intended for distribution internally consistent, and does it conform to the agreement's objectives?

Step 2: Dataset validation.

The EDSF CT checks and validates the data and metadata, ensuring that they conform to commonly used standards and formats. It also identifies any inconsistencies in the data structure. The documentation should comprehensively describe the properties of the dataset and all its components. It should also address the dataset's potential limitations.

Checkpoint 3: Do data and metadata conform to commonly used standards and formats?

Step 3: Final bilateral checks.

The EDSF CT prepares the web services in the formats prescribed by the installation. The EDSF installation uses the Open Geospatial Consortium (OGC) standards for data containing geospatial information. The EDSF CT and the data producer interact to verify that the web service distribution conforms to the submitted data. Once the web services are approved, they become available on the SEISMOFAULTS.EU portal with restricted access for the final quality and integrity check and for an additional check done by the data producer.
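As an illustration of what checking an OGC web service can involve, the sketch below builds a standard `GetCapabilities` request URL, which is the usual first call for inspecting what a service exposes. The choice of WFS as the service type and the endpoint `https://example.org/ows` are assumptions made for illustration only; the actual service types and addresses are those provided by the EDSF CT.

```python
from urllib.parse import urlencode

# Hypothetical placeholder endpoint, NOT the real SEISMOFAULTS.EU address.
BASE_URL = "https://example.org/ows"


def build_capabilities_url(base_url: str,
                           service: str = "WFS",
                           version: str = "2.0.0") -> str:
    """Build an OGC GetCapabilities request URL using key-value parameters."""
    params = {
        "service": service,            # OGC service type (assumed WFS here)
        "version": version,            # protocol version to request
        "request": "GetCapabilities",  # standard OGC operation name
    }
    return f"{base_url}?{urlencode(params)}"


if __name__ == "__main__":
    print(build_capabilities_url(BASE_URL))
```

The same helper can be pointed at another OGC service type, for example `build_capabilities_url(BASE_URL, service="WMS", version="1.3.0")`, if the dataset is distributed as map layers rather than features.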

Checkpoint 4: Were the dataset quality and integrity checked by both parties?

Step 4: Dataset published on the SEISMOFAULTS.EU portal.

At this step, both parties have performed all the necessary checks. The data producer and the EDSF CT agree to publish the dataset web services. The EDSF CT affixes the Creative Commons Attribution 4.0 (CC BY 4.0) license to the distributed dataset without further licensing information and removes all access restrictions on the dataset. A recognized organization (e.g., DataCite) must have minted a Digital Object Identifier (DOI) that identifies the dataset. If a landing page linked to the DOI does not exist, the EDSF installation can provide space to host it. Any web page or link to the data, metadata, technical documentation, and any other relevant information connected to the dataset that was initially covered by confidentiality should also be made publicly available. The data distribution becomes public.
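The sequence of steps and checkpoints described above can be sketched as a simple gated workflow. This is a minimal illustration, not the EDSF CT's actual tooling: the function name `run_quality_control` is hypothetical, and the sketch assumes that a failed checkpoint holds the dataset at the current step until the issue is resolved.

```python
from typing import Sequence

# Short labels for the five steps of the Quality Control process.
STEPS = [
    "Step 0: intent to distribute a dataset",
    "Step 1: dataset preliminary examination",
    "Step 2: dataset validation",
    "Step 3: final bilateral checks",
    "Step 4: dataset published",
]

# Checkpoint i gates the transition from Step i-1 to Step i.
CHECKPOINTS = [
    "Checkpoint 1: agreement exists between data producer and EDSF CT",
    "Checkpoint 2: dataset is internally consistent and meets the agreement",
    "Checkpoint 3: data and metadata conform to common standards and formats",
    "Checkpoint 4: quality and integrity checked by both parties",
]


def run_quality_control(answers: Sequence[bool]) -> str:
    """Return the step reached, given checkpoint outcomes in order.

    Assumption: the process stops at the first failed checkpoint and
    stays at the step that precedes it.
    """
    reached = 0
    for passed in answers[:len(CHECKPOINTS)]:
        if not passed:
            break
        reached += 1
    return STEPS[reached]


if __name__ == "__main__":
    # All four checkpoints passed: the dataset reaches publication.
    print(run_quality_control([True, True, True, True]))
    # Checkpoint 3 failed: the dataset stays at the validation step.
    print(run_quality_control([True, True, False]))
```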